Better Sensors for All-Weather Smart Cars

Sensors in autonomous vehicles (AVs) help keep drivers, passengers, and pedestrians safer. But bad weather can limit what these sensors are able to detect. Find out what innovations researchers and engineers are developing to overcome this obstacle.

Automotive sensors such as cameras, radar, ultrasonic, and LiDAR sensors help autonomous vehicles (AVs) safely navigate roads. But bad weather can make navigation a challenge.

In this article, we look at the strengths and weaknesses of these different sensors. We’ll also learn about recent innovations that could help AVs “see” even better.

Sensors 101: How AVs see

Let’s take a closer look at these different perception technologies used in autonomous vehicles today.

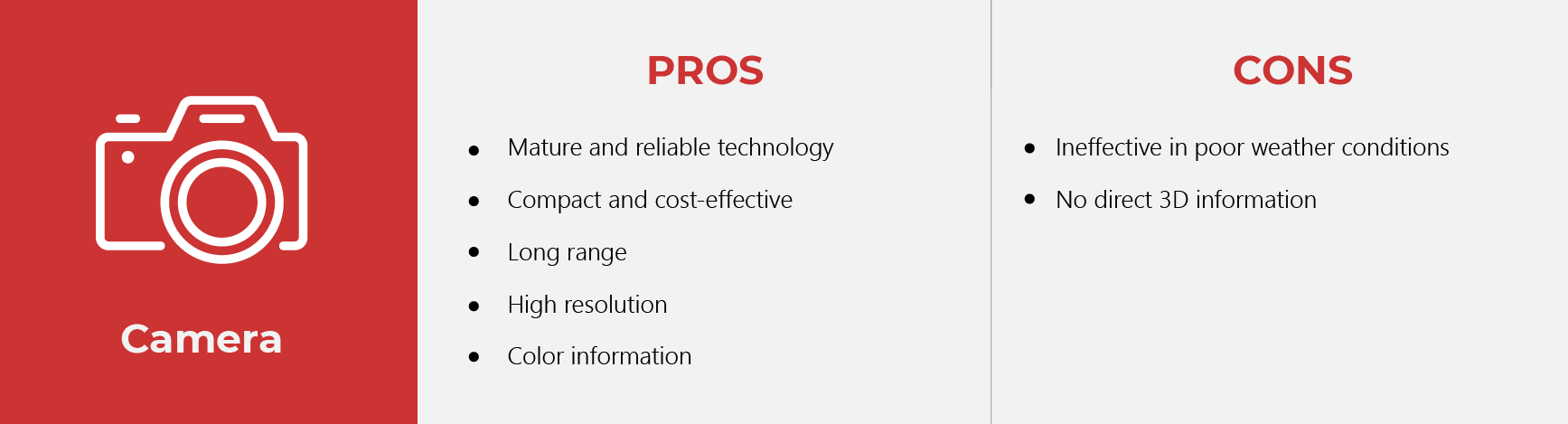

Camera

Cameras are now a fundamental part of new vehicles. They help drivers maneuver and park more easily, and enable systems such as lane departure warning and adaptive cruise control. With the aid of software, they can detect and identify both stationary and moving objects such as road markings and traffic signs.

However, cameras can only detect objects that are illuminated, making them less reliable in difficult environmental conditions such as fog, snow, ice, and darkness. And while they’re good at imaging, they can’t measure distance on their own.

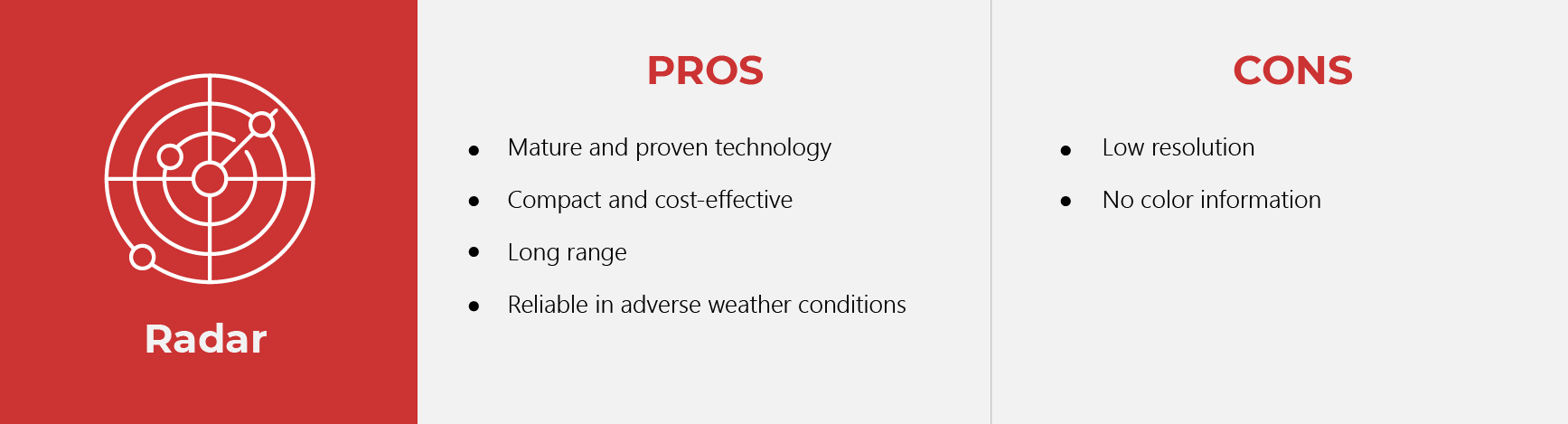

Radar

Widely used in seafaring and aerospace, radar has also been used in vehicles for years. Radar sensors fire pulses of radio waves at a target area, and the waves bounce off objects and return to the sensor. The returning signal provides information on the speed and location of each object. This technology enables driver assistance systems such as collision avoidance, automatic emergency braking, and adaptive cruise control.

Radar sensors are inexpensive, robust, and weather-resistant. However, one weakness is their low resolution. They can detect objects, but cannot identify or classify them.
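For readers who like to see the arithmetic, here is a minimal sketch of how a pulse-and-echo measurement could be turned into distance and speed. The function names are hypothetical, and the 77 GHz carrier in the usage note is an assumption (a band commonly used by automotive radar), not a figure from this article.

```python
# Illustrative only: textbook time-of-flight and Doppler formulas.
C = 299_792_458.0  # speed of light in m/s


def radar_range_m(round_trip_s: float) -> float:
    """Distance to the target from the pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half.
    """
    return C * round_trip_s / 2.0


def radial_speed_mps(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Speed along the line of sight from the Doppler shift.

    Uses v = f_d * c / (2 * f_c), valid for speeds far below c.
    """
    return doppler_shift_hz * C / (2.0 * carrier_hz)
```

As a rough usage example, an echo that returns after one microsecond corresponds to `radar_range_m(1e-6)`, or about 150 meters, and a Doppler shift of 5,136 Hz on an assumed 77 GHz carrier corresponds to roughly 10 meters per second.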

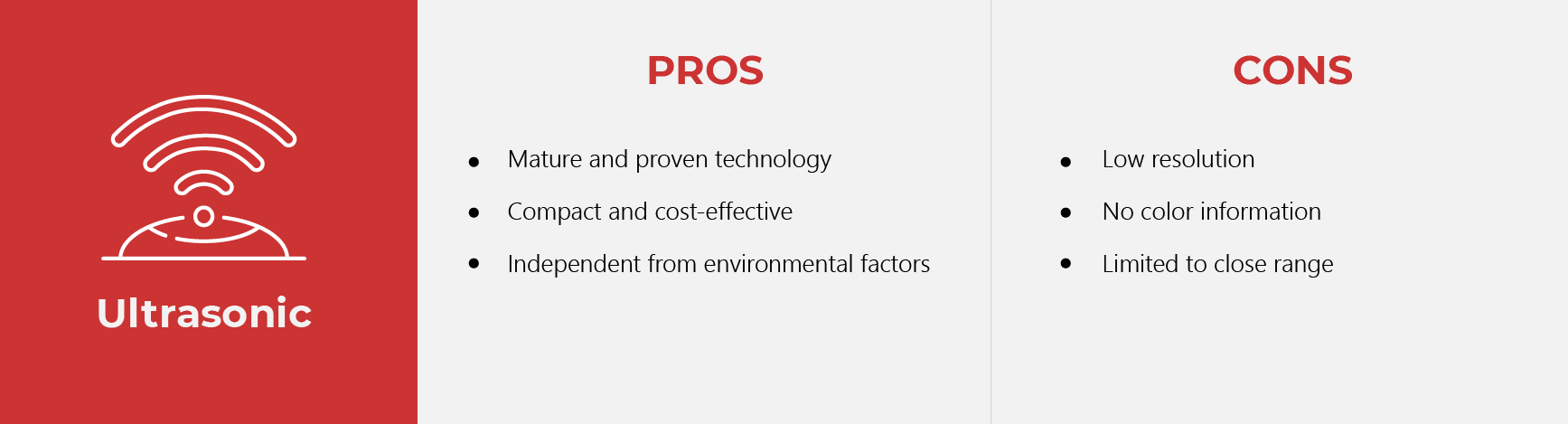

Ultrasonic

Using high-frequency sound waves that are inaudible to the human ear, these sensors measure distances and are commonly used for parking assistance systems. Able to detect objects regardless of color or material, they provide reliable and precise distance information at night and in fog. However, this cost-effective and proven technology can only be used at close range.
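The distance measurement behind a parking sensor can be sketched in a few lines. This is an illustration under stated assumptions: the function names are hypothetical, and the speed of sound uses a common linear approximation for dry air rather than anything specified in this article.

```python
# Illustrative only: echo ranging with sound instead of radio waves.

def speed_of_sound_mps(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air at the given temperature."""
    return 331.3 + 0.606 * temp_c


def echo_distance_m(round_trip_s: float, temp_c: float = 20.0) -> float:
    """One-way distance to an obstacle from the echo's round-trip time."""
    return speed_of_sound_mps(temp_c) * round_trip_s / 2.0
```

With these assumptions, an echo that takes 10 milliseconds to return at 20 °C gives `echo_distance_m(0.01)`, or about 1.7 meters. Because sound is so much slower than light or radio waves, long distances mean long waits between pings, which is one intuitive reason this approach suits close-range tasks.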

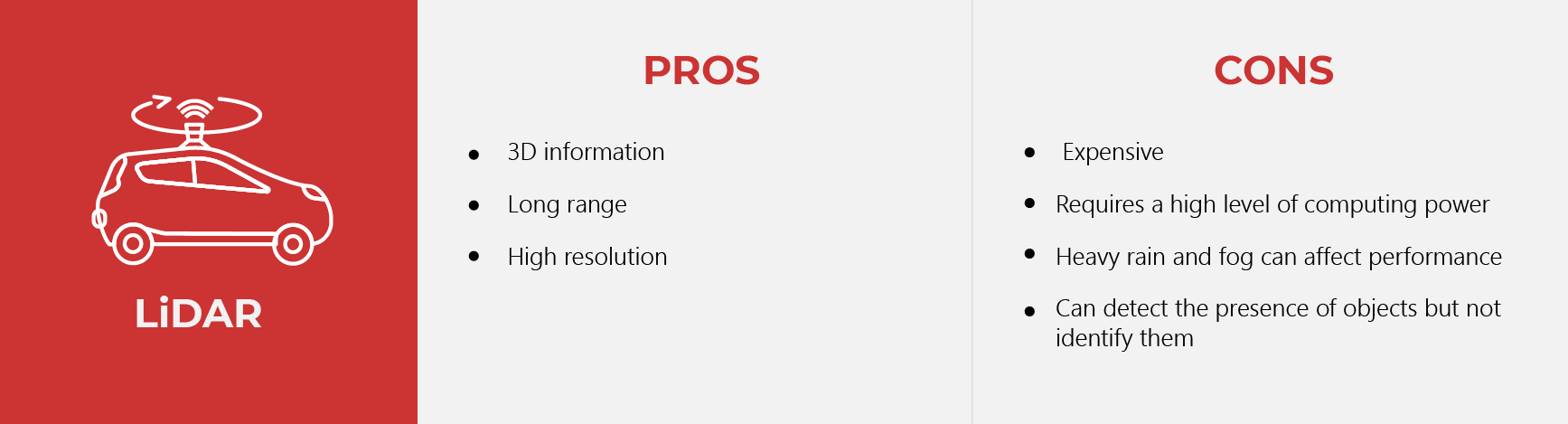

LiDAR

LiDAR stands for “light detection and ranging” and was originally developed as a surveying technology. Invisible laser light is used to measure the distance to objects. Emitting up to one million laser pulses per second, these sensors produce a high-resolution, 360-degree view of the environment in 3D.
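To give a feel for how individual laser measurements become a 3D view, here is a minimal sketch that converts one return (a measured distance plus the beam's direction) into a point in space. The function name and the axis convention (x forward, y left, z up) are assumptions made for illustration.

```python
import math

# Illustrative only: one LiDAR return converted to Cartesian coordinates.

def lidar_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert a range and beam direction into an (x, y, z) point.

    Assumed convention: x forward, y left, z up; azimuth is measured
    in the horizontal plane, elevation upward from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )
```

Repeating this conversion for every pulse in a full sweep produces the "point cloud" that downstream software has to process, which hints at why LiDAR is so demanding on computing power.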

However, LiDAR systems can be affected by heavy fog and severe downpours. LiDAR sensors also require a huge amount of processing power to translate measurements into actionable data. Furthermore, “the technology is only re-creating an image of its surroundings, rather than getting a visual representation of what’s going on,” as Chris Teague explains in an article for Autoweek. “LiDAR is more than capable of identifying that there is something in the road that needs to be avoided, but is not able to tell exactly what it’s looking at.”

LiDAR sensors are expensive, costing as much as ten times the price of a camera and radar. That may change soon, though. More than a decade ago, Velodyne introduced the first three-dimensional LiDAR sensor, which cost around USD 75,000. The company’s new model, smaller than its predecessors and with no moving parts, could be sold at around USD 100 in high-volume production.

American startup Luminar Technologies has also developed a low-cost LiDAR that bundles hardware and software. The company has two versions of its Iris system for customers: one priced at under USD 1,000 for high-volume production and the other at under USD 500.

Innovations in sensors

The weather may be unpredictable, but sensors in AVs have to be reliable. What innovations and research projects can make sensors dependable come rain or shine, heat waves or heavy snow?

Sensor fusion in the snow

Residents of the Keweenaw Peninsula in Michigan get an average of more than 200 inches of snow every winter, making it the perfect location to test AV technology.

At SPIE Defense + Commercial Sensing 2021, researchers from Michigan Technological University presented two papers that discussed solutions for autonomous vehicles in snowy driving conditions.

As we’ve seen, every sensor has its strengths and limitations. Combining data from multiple sensor technologies helps offset the weaknesses of any one type of sensor. This process, called sensor fusion, uses AI to create a single image or model of the vehicle’s environment.
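The idea of letting a more trustworthy sensor count for more can be shown with a much simpler technique than AI. The sketch below uses classic inverse-variance weighting to merge distance estimates from different sensors. It is not the Michigan Tech team's method, which relies on a trained neural network; the function name and the sample numbers are hypothetical.

```python
# Illustrative only: inverse-variance weighting, a textbook fusion rule.

def fuse_estimates(estimates):
    """Fuse (value, variance) pairs into one estimate.

    Each sensor is weighted by 1 / variance, so noisier sensors
    contribute less. Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical readings: a camera-based estimate that is noisy in snow
# and a radar estimate that is more stable.
camera = (20.0, 4.0)  # meters, variance in square meters
radar = (21.0, 1.0)
fused_distance, fused_variance = fuse_estimates([camera, radar])
```

In this made-up case the fused distance is 20.8 meters, closer to the radar reading, and the fused variance of 0.8 is lower than either sensor's alone. That is the basic promise of fusion: the combined picture can be more reliable than any single input.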

"Sensor fusion uses multiple sensors of different modalities to understand a scene," said Nathir Rawashdeh, assistant professor of computing at Michigan Tech's College of Computing and one of the study’s lead researchers.

"You cannot exhaustively program for every detail when the inputs have difficult patterns,” Rawashdeh explained. “That's why we need AI."

The team collected local data by driving a Michigan Tech autonomous vehicle in heavy snow. To teach their AI program what snow looks like and how to see past it, the team went over more than a thousand frames of LiDAR, radar, and image data from snowy roads in Norway and Germany.

Here’s a companion video to the team’s research. The video shows how the AI network processes and fuses each sensor’s data to identify drivable (in green) and non-drivable areas.

Two radars are better than one

A team of electrical engineers at the University of California San Diego has developed technology to improve how radar sees.

"It's a LiDAR-like radar," said Dinesh Bharadia, a professor of electrical and computer engineering at the UC San Diego Jacobs School of Engineering. "Fusing LiDAR and radar can also be done with our techniques, but radars are cheap. This way, we don't need to use expensive LiDARs."

A pair of millimeter-wave radar sensors is placed on the vehicle hood, spaced 1.5 meters apart, about the width of an average car. This placement enables the system to see more space and detail than a single radar sensor.

To test their system, the team used a fog machine to “hide” another vehicle. The system accurately predicted its 3D geometry, as seen in this video.

“By having two radars at different vantage points with an overlapping field of view, we create a region of high-resolution, with a high probability of detecting the objects that are present,” said Kshitiz Bansal, a computer science and engineering Ph.D. student at UC San Diego.

But more radars also mean more noise: random points that do not belong to any objects. The sensors can also pick up echo signals, which are reflections of radio waves that do not come from the objects that are being detected. To solve this, the team developed new algorithms that can combine data from the two radar sensors to produce a new image free of noise.
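One intuitive way to see why two overlapping radars help with noise is a cross-check: keep a detection only if the other radar reports something nearby. The sketch below illustrates that idea and is not the UC San Diego team's algorithm, whose details are not given here. The function name, the 0.5 meter threshold, and the assumption that both point sets are already expressed in the same vehicle coordinate frame are all hypothetical.

```python
import math

# Illustrative only: keep radar points that the other radar confirms.

def cross_confirmed(points_a, points_b, max_gap_m=0.5):
    """Return points from either radar that have a neighbor in the other.

    points_a and points_b are lists of (x, y) tuples in meters, assumed
    to share one coordinate frame. Isolated points are treated as noise.
    """
    def has_neighbor(point, others):
        return any(math.dist(point, other) <= max_gap_m for other in others)

    kept = [p for p in points_a if has_neighbor(p, points_b)]
    kept += [q for q in points_b if has_neighbor(q, points_a)]
    return kept


# Hypothetical detections: both radars see an object near (5, 0),
# and each also reports one stray point.
left_radar = [(5.0, 0.0), (9.0, 9.0)]
right_radar = [(5.2, 0.1), (-3.0, 4.0)]
confirmed = cross_confirmed(left_radar, right_radar)
```

Here the two stray points are dropped and only the pair near (5, 0) survives. A real system has to cope with echoes that fool both sensors at once, which is part of why purpose-built algorithms are needed.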

The UC San Diego team also constructed the first dataset combining data from two radars. It consists of 54,000 radar frames of driving scenes in various conditions.

“There are currently no publicly available datasets with this kind of data, from multiple radars with an overlapping field of view,” Bharadia said. “We collected our own data and built our own dataset for training our algorithms and for testing.”

The UC San Diego researchers are working with automaker Toyota to combine their radar technology with cameras.

Autonomous vehicles could increase road safety, reducing dangerous driving behaviors or human errors that could cause accidents. They also offer those with disabilities more personal freedom, enabling them to travel whenever and wherever they want. Innovations in sensing technologies can help make roads safer and more enjoyable for drivers, passengers, and pedestrians.
