3 Breakthrough Ways to Train Robots

Researchers have unveiled three promising approaches to robot training, each with clear implications for manufacturing. One team created a flexible interface that lets people teach robots new skills through simple, intuitive guidance. Another introduced a system that lets robots be controlled using only a single camera. A third developed an AI-driven framework that allows machines to learn tasks from just one instructional video. Together, these innovations mark a leap toward smarter, more adaptable robotics in industrial manufacturing.

For decades, robots have been trained through rigid, labor-intensive methods such as teach pendants, lead-through programming, and offline simulation. Operators had to guide robots manually with controllers or map out movements in simulation before testing them in real-world conditions. Though reliable for repetitive mass production, these approaches are time-consuming, costly, and inflexible, especially when tasks or products change.

Now, driven by advances in AI and machine learning, a new generation of training methods is emerging, designed around intuition, adaptability, and minimal engineering overhead. The result is a wave of self-teaching robots that rely less on laborious programming and are better able to analyze, adapt, and operate efficiently.


Robotic Training Breakthrough #1: Learning by Doing

Training a robot has long been the domain of design and engineering teams fluent in code. But Massachusetts Institute of Technology (MIT) researchers are now pushing toward a future where almost anyone can show a robot how to perform a task. Central to this shift is a new handheld interface that gives machines the ability to learn in three distinct ways.

To streamline this process, engineers created a versatile demonstration interface (VDI): a handheld device fitted with sensors and a camera that attaches to a robot’s arm and captures detailed data on motion. It lets people guide robots in three modes: remotely piloting them with a joystick (teleoperation), physically moving the robot’s arm through a task (kinesthetic guidance), or detaching the device and using it to perform the task directly, with the robot later replaying the captured motions. By recording pressure, movement, and visual cues, the interface collects rich data that robots can then use to teach themselves.

Researchers tested the system in a real-world setting, asking volunteers to train a robot on two common factory-floor tasks. In one, the robot learned to press-fit pegs into holes, a motion often seen in fastening processes. In the other, it was guided through a molding task: rolling a pliable substance evenly across a rod, similar to shaping thermoplastic materials.

Participant feedback was revealing. The experts who took part saw value in all three training modes, but many favored the “natural” approach of demonstrating the task directly, describing it as the most intuitive. Still, they recognized teleoperation as safer for handling hazardous materials, while kinesthetic guidance proved useful for heavy-lifting scenarios. The experiment underscored a broader trend: as manufacturing evolves, robots are starting to learn in ways strikingly close to how people learn.

Robotic Training Breakthrough #2: Self-Aware Robots

Inside MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), a soft robotic hand reaches for a small object. What makes this moment striking isn’t the hardware but what’s missing: no intricate sensors, no finely tuned mechanics. Instead of relying on extra equipment or expensive sensor networks, CSAIL researchers developed a vision-based system in which a robot simply watches itself through a single camera.

This ability comes from a new approach called Neural Jacobian Fields (NJF). By analyzing its own random motions, the robot gradually learns how its body responds to control signals, building a form of self-awareness that helps it adapt to new tasks.

At its core is a neural network that captures two things at once: the robot’s 3D shape and how each point on its body moves in response to motor commands. The method draws inspiration from neural radiance fields (NeRF), which reconstruct 3D scenes from images. NJF goes further by tying geometry to movement, effectively letting robots “see” their own bodies and predict how they will move when given a command.

Training begins with multiple cameras recording the robot as it performs random motions. From this footage, the system infers how commands translate into motion, with no human input or prior knowledge of the robot’s structure. Once trained, the robot needs only a single camera for real-time control at around 12 hertz, a practical speed compared with physics-heavy simulations, which are often too slow for controlling soft robots.
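To make “learning from random motions” concrete, here is a deliberately simplified sketch. It assumes a single tracked point and a linear model in which a Jacobian J maps a small change in motor commands du to a change in the point’s position, dx ≈ J du. The sketch estimates J by least squares from random command perturbations, then uses the pseudo-inverse of J to choose command steps toward a target. NJF itself learns a neural field that predicts this kind of mapping for every point on the robot’s body from camera images; the simulated robot (`true_jacobian`), the noise levels, and the function names below are hypothetical.

```python
import numpy as np

def estimate_jacobian(du: np.ndarray, dx: np.ndarray) -> np.ndarray:
    """Least-squares fit of J in dx ≈ J @ du.

    du: (N, m) random command perturbations
    dx: (N, d) observed displacements of the tracked point
    Returns J with shape (d, m).
    """
    # Solve du @ J.T ≈ dx for J.T (all columns of dx at once), then transpose.
    jt, *_ = np.linalg.lstsq(du, dx, rcond=None)
    return jt.T

def control_step(J: np.ndarray, x: np.ndarray, target: np.ndarray,
                 gain: float = 0.5) -> np.ndarray:
    """Command change that moves the point a fraction `gain` of the way to target."""
    return gain * np.linalg.pinv(J) @ (target - x)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical robot: 3 motors move one 2D point through an unknown linear map.
    true_jacobian = rng.normal(size=(2, 3))

    # "Random motions": small random command changes and noisy observed displacements.
    du = rng.normal(scale=0.1, size=(200, 3))
    dx = du @ true_jacobian.T + rng.normal(scale=1e-3, size=(200, 2))
    J = estimate_jacobian(du, dx)

    # Closed loop: observe the point, compute a command step, let the robot respond.
    x = np.zeros(2)
    target = np.array([1.0, -0.5])
    for _ in range(20):
        x = x + true_jacobian @ control_step(J, x, target)
    print(np.round(x, 3))
```

In a real system, each loop iteration would use a fresh camera observation of the point, so the loop rate is bounded by how fast vision and the model can run; the roughly 12 Hz figure above refers to NJF, not to this toy.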

Future versions could be trained with nothing more than smartphone footage, making advanced control systems accessible outside research labs. If that vision is realized, robots could adapt readily to farms, construction sites, and other unpredictable environments, supporting innovation in product development.


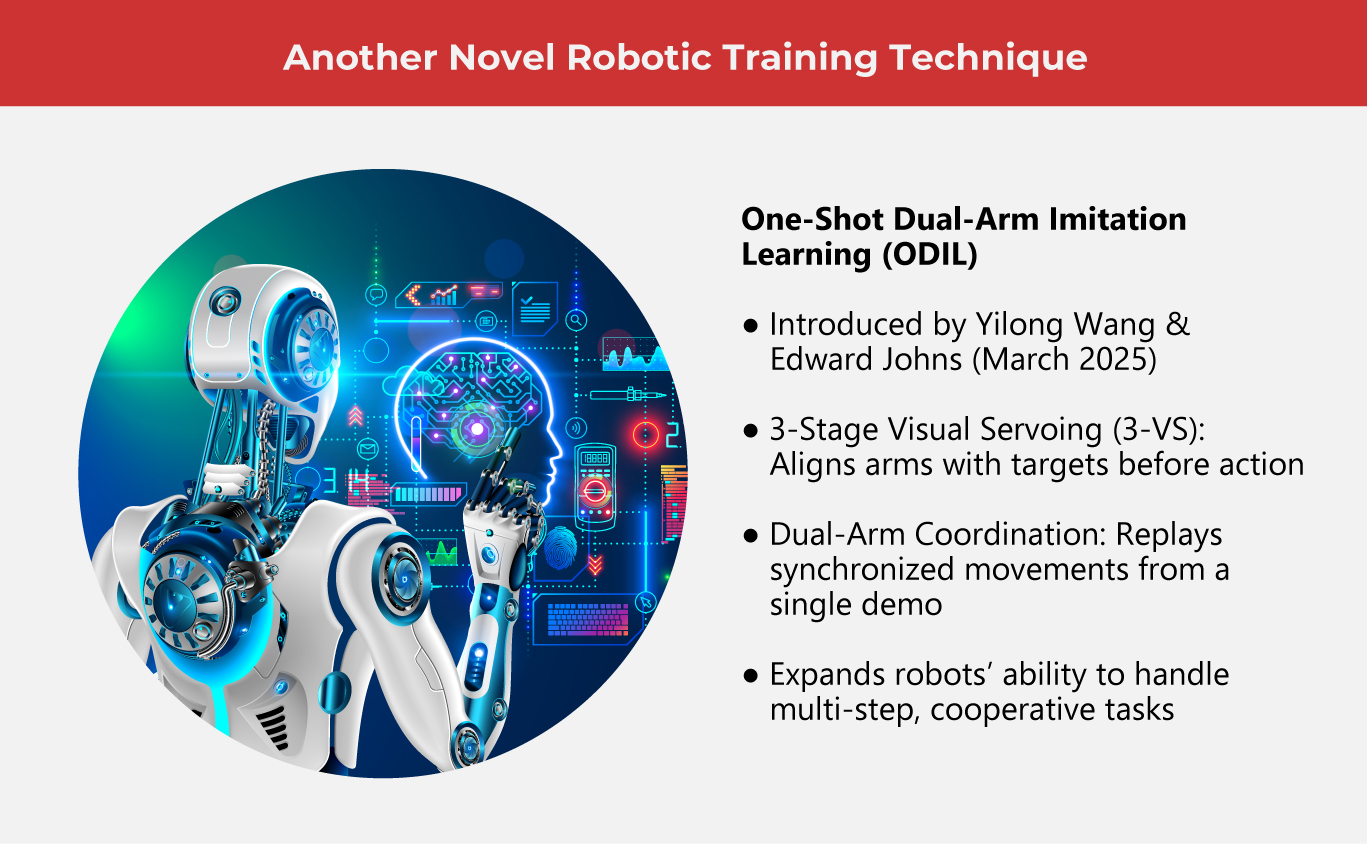

Robotic Training Breakthrough #3: Trained by How-To Videos

At Cornell University, scientists exploring new ways to train robots have introduced a method called RHyME (Retrieval for Hybrid Imitation under Mismatched Execution). Unlike older training systems that demand hours of step-by-step programming, RHyME allows a robot to pick up an entire task after seeing just a single instructional video.

The concept builds on imitation learning, a machine-learning technique in which robots copy human demonstrations to learn how to complete specific actions. Until now, this approach has been hampered by practical obstacles. Human movements are often too fluid and nuanced for a robot to mimic directly, and video-based training usually demands slow, flawless demonstrations repeated many times. On top of that, because robots and humans don’t move in exactly the same way, even small mismatches can cause training to fail.

RHyME addresses these limitations by making robots more adaptive and less rigid in how they interpret demonstrations. Instead of simply copying what they see, robots equipped with RHyME draw on their own memory of past training videos to fill in the gaps. If a robot watches a person complete an action, it can connect the movement to similar experiences and piece together a successful sequence.
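The retrieval idea can be illustrated with a toy sketch. It assumes each short video segment has already been converted into a fixed-length feature vector (the encoder that would do this is out of scope and hypothetical here). For every segment of the human demonstration, the sketch retrieves the most similar clip from the robot’s own library by cosine similarity, then stitches the retrieved clips into a sequence the robot could imitate. This is a simplified nearest-neighbor illustration of “retrieve and piece together,” not RHyME’s published algorithm; `retrieve_sequence`, the clip names, and the feature values are invented for illustration.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between rows of a (n, d) and rows of b (k, d)."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

def retrieve_sequence(human_segments: np.ndarray,
                      robot_clip_features: np.ndarray,
                      robot_clip_names: list[str]) -> list[str]:
    """For each human demo segment, pick the most similar robot clip.

    human_segments: (n, d) features of the human video, one row per segment
    robot_clip_features: (k, d) features of clips the robot has already executed
    Returns the stitched list of robot clip names, in demonstration order.
    """
    sims = cosine_similarity(human_segments, robot_clip_features)
    best = sims.argmax(axis=1)  # index of the closest robot clip per segment
    return [robot_clip_names[i] for i in best]

if __name__ == "__main__":
    # Hypothetical 3D features for a small library of robot clips.
    names = ["reach", "grasp", "lift", "place"]
    library = np.array([
        [1.0, 0.0, 0.0],
        [0.0, 1.0, 0.0],
        [0.0, 0.0, 1.0],
        [1.0, 1.0, 0.0],
    ])
    # A human demo whose segments only roughly match the robot's own clips.
    human = np.array([
        [0.9, 0.1, 0.0],
        [0.1, 0.8, 0.1],
        [0.0, 0.2, 0.9],
    ])
    print(retrieve_sequence(human, library, names))
```

With these made-up features, the three human segments map to `['reach', 'grasp', 'lift']`. Matching each segment by similarity rather than requiring an exact copy is what lets imperfect or mismatched human motion still point to clips the robot knows how to execute.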

The results are promising. Robots trained with RHyME needed only about 30 minutes of robot training data, yet achieved more than a 50% improvement in task success compared with previous methods. This efficiency could speed up product validation and make training robots in real-world settings far more practical, particularly for complex, multi-step jobs.

By reducing the data burden and enabling machines to adapt more like humans do, RHyME represents a step toward robots that can handle everyday environments with ease. It offers a glimpse of a future where teaching a robot could be as simple as pressing play on a video.

The landscape of robotic training is rapidly shifting from rigid programming to intuitive, human-like learning. This points toward a future where robots adapt faster, collaborate smarter, deliver greater economic value, and integrate seamlessly into real-world environments with minimal technical barriers.

As one of the Top 20 EMS companies in the world, IMI has over 40 years of experience in providing electronics manufacturing and technology solutions.

We are ready to support your business on a global scale.

Our proven technical expertise, worldwide reach, and vast experience in high-growth and emerging markets make us the ideal global manufacturing solutions partner.

Let's work together to build our future today.
